8.2. Ignorance pdfs: Indifference and translation groups

Original version by Christian Forssén (Department of Physics, Chalmers University of Technology, Sweden) and Daniel Phillips (Department of Physics and Astronomy, Ohio University), Oct 23, 2019.

Minor updates by Dick Furnstahl, November, 2021.

Discrete permutation invariance

Consider a six-sided die

How do we assign \(p_i \equiv p(X_i|I)\), \(i \in \{1, 2, 3, 4, 5, 6\}\)?

We do know \(\sum_i p(X_i|I) = 1\)

Invariance under labeling \(\Rightarrow p(X_i|I)=1/6\)

provided that the prior information \(I\) says nothing that breaks the permutation symmetry.

Location invariance

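Indifference to a constant shift \(x_0\) for a location parameter \(x\) implies that

\[
p(x|I)\,dx \approx p(x+x_0|I)\,d(x+x_0) = p(x+x_0|I)\,dx,
\]

in the allowed range.

Location invariance implies that

\[
p(x|I) = p(x+x_0|I) \quad \Rightarrow \quad p(x|I) = \mathrm{constant},
\]

provided that the prior information \(I\) says nothing that breaks the symmetry.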

The pdf will be zero outside the allowed range (specified by \(I\)).

Scale invariance

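Indifference to a re-scaling \(\lambda\) of a scale parameter \(x\) implies that

\[
p(x|I)\,dx \approx p(\lambda x|I)\,d(\lambda x) = \lambda\, p(\lambda x|I)\,dx,
\]

in the allowed range.

Invariance under re-scaling implies that

\[
p(x|I) = \lambda\, p(\lambda x|I) \quad \Rightarrow \quad p(x|I) \propto \frac{1}{x},
\]

provided that the prior information \(I\) says nothing that breaks the symmetry.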

The pdf will be zero outside the allowed range (specified by \(I\)).

This prior is often called a Jeffreys prior; it represents complete ignorance of a scale parameter within an allowed range.

It is equivalent to a uniform pdf for the logarithm, \(p(\log(x)|I) = \mathrm{constant}\), as can be verified with the change of variable \(y=\log(x)\): \(p(y|I) = p(x|I)\,|dx/dy| = x\,p(x|I) = \mathrm{constant}\). See the lecture notes on error propagation.
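A quick Monte Carlo sketch of this equivalence follows. The range \(x \in [1, 10^4]\), the sample size and the seed are arbitrary illustrative choices. We draw \(y = \log(x)\) uniformly and check that each decade of \(x\) receives the same probability, as a \(1/x\) pdf predicts.

```python
import math
import random


def sample_log_uniform(x_min, x_max, n, seed=1):
    """Draw n samples of x whose logarithm is uniform on [log(x_min), log(x_max)]."""
    rng = random.Random(seed)
    lo, hi = math.log(x_min), math.log(x_max)
    return [math.exp(rng.uniform(lo, hi)) for _ in range(n)]


def decade_fractions(samples, x_min, n_decades):
    """Fraction of samples in each decade [x_min * 10**k, x_min * 10**(k + 1))."""
    counts = [0] * n_decades
    for x in samples:
        k = math.floor(math.log10(x / x_min))
        if 0 <= k < n_decades:
            counts[k] += 1
    return [c / len(samples) for c in counts]


samples = sample_log_uniform(1.0, 1.0e4, 200_000)
fractions = decade_fractions(samples, 1.0, 4)
# A 1/x pdf on [1, 1e4] gives each of the four decades probability log(10)/log(1e4) = 1/4.
print([round(f, 3) for f in fractions])
```

By contrast, drawing \(x\) itself uniformly on \([1, 10^4]\) would place about 90% of the samples in the top decade. This shows how strongly a uniform pdf favors large values of a scale parameter.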

Example: Straight-line model

Consider the theoretical model

\[
y_\mathrm{th}(x) = \theta_1 x + \theta_0.
\]

Would you consider the intercept \(\theta_0\) a location or a scale parameter, or something else?

Would you consider the slope \(\theta_1\) a location or a scale parameter, or something else?

Consider also the statistical model for the observed data \(y_i = y_\mathrm{th}(x_i) + \epsilon_i\), where we assume independent, Gaussian noise \(\epsilon_i \sim \mathcal{N}(0, \sigma^2)\).

Would you consider the standard deviation \(\sigma\) a location or a scale parameter, or something else?

Symmetry invariance

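In fact, by symmetry indifference we could just as well have written the linear model as \(x_\mathrm{th}(y) = \theta_1' y + \theta_0'\).

We would then equate the probability elements for the two models:

\[
p(\theta_0, \theta_1 | I)\, d\theta_0\, d\theta_1 = q(\theta_0', \theta_1' | I)\, d\theta_0'\, d\theta_1'.
\]

The transformation gives \((\theta_0', \theta_1') = (-\theta_1^{-1}\theta_0, \theta_1^{-1})\).

This change of variables implies that

\[
q(\theta_0', \theta_1' | I) = p(\theta_0, \theta_1 | I) \left| \frac{\partial(\theta_0, \theta_1)}{\partial(\theta_0', \theta_1')} \right|,
\]

where the (absolute value of the) determinant of the Jacobian is

\[
\left| \frac{\partial(\theta_0, \theta_1)}{\partial(\theta_0', \theta_1')} \right|
= \left| \det \begin{pmatrix}
\partial \theta_0 / \partial \theta_0' & \partial \theta_0 / \partial \theta_1' \\
\partial \theta_1 / \partial \theta_0' & \partial \theta_1 / \partial \theta_1'
\end{pmatrix} \right|
= \frac{1}{|\theta_1'|^3} = |\theta_1|^3.
\]

Symmetry indifference requires \(q\) and \(p\) to be the same function. In summary we find that \(|\theta_1|^3 p(\theta_0, \theta_1 | I) = p(-\theta_1^{-1}\theta_0, \theta_1^{-1}|I).\)

This functional equation is satisfied by

\[
p(\theta_0, \theta_1 | I) \propto \frac{1}{\left( 1 + \theta_1^2 \right)^{3/2}}.
\]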
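To make the functional equation concrete, the sketch below checks \(|\theta_1|^3 p(\theta_0, \theta_1|I) = p(-\theta_0/\theta_1, 1/\theta_1|I)\) numerically at random points. It tests the symmetric prior \(p \propto (1+\theta_1^2)^{-3/2}\) and, for contrast, a uniform prior. The sampling box \(|\theta_0|, |\theta_1| \le 10\) and the function names are illustrative choices.

```python
import random


def symmetric_prior(theta0, theta1):
    """Unnormalized prior proportional to (1 + theta1**2)**(-3/2)."""
    return (1.0 + theta1**2) ** -1.5


def uniform_prior(theta0, theta1):
    """Unnormalized uniform prior, for comparison."""
    return 1.0


def max_violation(prior, n=1000, seed=2):
    """Largest relative mismatch in |theta1|^3 p(theta0, theta1) = p(-theta0/theta1, 1/theta1)."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(n):
        theta0 = rng.uniform(-10.0, 10.0)
        theta1 = rng.uniform(-10.0, 10.0)
        if abs(theta1) < 1e-3:
            continue  # stay away from the singular point theta1 = 0
        lhs = abs(theta1) ** 3 * prior(theta0, theta1)
        rhs = prior(-theta0 / theta1, 1.0 / theta1)
        worst = max(worst, abs(lhs - rhs) / abs(rhs))
    return worst


print(max_violation(symmetric_prior))  # expected: floating-point round-off only
print(max_violation(uniform_prior))    # large: the uniform prior is not invariant
```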

100 samples of straight lines with fixed intercept equal to 0 and slopes sampled from three different pdfs. Note in particular the prior preference for large slopes that results from using a uniform pdf.

The principle of maximum entropy

Having dealt with ignorance, let us move on to more enlightened situations.

Consider a die with the usual six faces that was rolled a very large number of times. Suppose that we were only told that the average number of dots was 2.5. What (discrete) pdf would we assign? That is, what are the probabilities \(\{ p_i \}\) that the face on top had \(i\) dots after a single throw?

The available information can be summarized as follows:

\[
\sum_{i=1}^{6} p_i = 1, \qquad \sum_{i=1}^{6} i\, p_i = 2.5.
\]

This is obviously not a normal die, with uniform probability \(p_i=1/6\), since the average result would then be 3.5. But there are many candidate pdfs that would reproduce the given information. Which one should we prefer?

It turns out that several different arguments all point in a direction that is very familiar to people with a physics background: we should prefer the probability distribution that maximizes an entropy measure while fulfilling the given constraints.

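It will be shown below that the preferred pdf \(\{ p_i \}\) is the one that maximizes

\[
Q\left(\{p_i\}; \lambda_0, \lambda_1\right) = -\sum_{i=1}^{6} p_i \log(p_i) + \lambda_0 \left( 1 - \sum_{i=1}^{6} p_i \right) + \lambda_1 \left( 2.5 - \sum_{i=1}^{6} i\, p_i \right),
\]

where the constraints are included via the method of Lagrange multipliers.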
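The die problem can be solved numerically in a few lines. Setting the derivatives of the Lagrangian \(-\sum_i p_i\log(p_i) + \lambda_0(1-\sum_i p_i) + \lambda_1(2.5-\sum_i i\,p_i)\) with respect to each \(p_i\) to zero gives \(-\log(p_i) - 1 - \lambda_0 - \lambda_1 i = 0\), so \(p_i \propto \exp(-\lambda_1 i)\). Only \(\lambda_1\) then has to be found so that the mean constraint holds. The sketch below does this by bisection. The bracket \([-20, 20]\) and the tolerance are arbitrary illustrative choices.

```python
import math

FACES = range(1, 7)


def maxent_die(mean_target, tol=1e-12):
    """MaxEnt pmf for a six-sided die with a given average number of dots.

    Stationarity gives p_i proportional to exp(-lambda1 * i); lambda1 is found
    by bisection so that sum_i i * p_i equals mean_target (valid for 1 < mean < 6).
    """
    def pmf(lam):
        weights = [math.exp(-lam * i) for i in FACES]
        z = sum(weights)
        return [w / z for w in weights]

    def mean(lam):
        return sum(i * p for i, p in zip(FACES, pmf(lam)))

    lo, hi = -20.0, 20.0  # mean(lam) decreases monotonically with lam
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) > mean_target:
            lo = mid  # mean still too large: lambda1 must increase
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return lam, pmf(lam)


lam1, p = maxent_die(2.5)
print(f"lambda1 = {lam1:.4f}")
print("p_i =", [round(pi, 4) for pi in p])
```

The result is a geometrically decreasing pmf. Low faces are favored just enough to pull the average down to 2.5, and no further structure is imposed. With a target of 3.5 the same routine returns \(\lambda_1 \approx 0\) and the fair-die assignment \(p_i = 1/6\).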

The monkey argument

The monkey argument is a model for assigning probabilities to \(M\) different alternatives that satisfy some constraint as described by \(I\):

Monkeys throwing \(N\) balls into \(M\) equally sized boxes.

The normalization condition \(N = \sum_{i=1}^M n_i\).

The fraction of balls in each box gives a possible assignment for the corresponding probability \(p_i = n_i / N\).

The distribution of balls \(\{ n_i \}\) divided by \(N\) is therefore a candidate pdf \(\{ p_i \}\).

After one round the monkeys have distributed their (large number of) balls over the \(M\) boxes.

The resulting pdf might not be consistent with the constraints of \(I\), however, in which case it should be rejected as a possible candidate.

After many such rounds, some distributions will be found to come up more often than others. The one that appears most frequently (and satisfies \(I\)) would be a sensible choice for \(p(\{p_i\}|I)\).

Since our ideal monkeys have no agenda of their own to influence the distribution, this most favored distribution can be regarded as the one that best represents our given state of knowledge.

Now, let us see how this preferred solution corresponds to the pdf with the largest entropy. Remember in the following that \(N\) (and \(n_i\)) are considered to be very large numbers (\(N/M \gg 1\)).

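The logarithm of the number of micro-states, \(W\), as a function of \(\{n_i\}\) follows from the multinomial distribution. Here we use the Stirling approximation \(\log(n!) \approx n\log(n) - n\) for large numbers, and the terms \(-N\) and \(+\sum_i n_i\) cancel by normalization:

\[
\log\left( W(\{n_i\}) \right) = \log(N!) - \sum_{i=1}^M \log(n_i!) \approx N\log(N) - \sum_{i=1}^M n_i \log(n_i).
\]

There are \(M^N\) different ways to distribute the balls.

The micro-states \(\{ n_i\}\) are connected to the pdf \(\{ p_i \}\), and the frequency of a given pdf is given by

\[
F(\{p_i\}) = \frac{W(\{n_i\})}{M^N}.
\]

Therefore, the logarithm of this frequency is

\[
\log\left( F(\{p_i\}) \right) \approx -N\log(M) + N\log(N) - \sum_{i=1}^M n_i \log(n_i).
\]

Substituting \(p_i = n_i/N\), and using the normalization condition, finally gives

\[
\log\left( F(\{p_i\}) \right) \approx -N\log(M) - N\sum_{i=1}^M p_i \log(p_i).
\]

We note that \(N\) and \(M\) are constants, so the preferred pdf is given by the \(\{ p_i \}\) that maximizes

\[
S = -\sum_{i=1}^M p_i \log(p_i).
\]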

You might recognise this quantity as the entropy from statistical mechanics. The interpretation of entropy in statistical mechanics is the measure of uncertainty that remains about a system after its observable macroscopic properties, such as temperature, pressure and volume, have been taken into account. For a given set of macroscopic variables, the entropy measures the degree to which the probability of the system is spread out over different possible microstates. Specifically, entropy is a logarithmic measure of the number of micro-states with significant probability of being occupied \(S = -k_B \sum_i p_i \log(p_i)\), where \(k_B\) is the Boltzmann constant.
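The Stirling step in the monkey argument can be checked numerically. The sketch below compares two quantities:

- the exact \(\log F\), with \(F = W/M^N\) and \(W = N!/\prod_i n_i!\), computed via `math.lgamma` (\(\log(n!) = \)`lgamma(n + 1)`);
- the large-\(N\) result \(-N\log(M) + NS\).

The four-box pdf and the values of \(N\) are arbitrary illustrative choices.

```python
import math


def log_frequency_exact(counts):
    """Exact log F = log(N! / prod_i n_i!) - N log M for ball counts {n_i}."""
    n_total = sum(counts)
    m_boxes = len(counts)
    log_w = math.lgamma(n_total + 1) - sum(math.lgamma(n + 1) for n in counts)
    return log_w - n_total * math.log(m_boxes)


def log_frequency_stirling(counts):
    """Large-N approximation log F ~ -N log M + N S, with S = -sum_i p_i log p_i."""
    n_total = sum(counts)
    m_boxes = len(counts)
    entropy = -sum((n / n_total) * math.log(n / n_total) for n in counts if n > 0)
    return -n_total * math.log(m_boxes) + n_total * entropy


p = [0.1, 0.2, 0.3, 0.4]  # illustrative pdf over M = 4 boxes
for n_total in (100, 10_000, 1_000_000):
    counts = [round(pi * n_total) for pi in p]
    exact = log_frequency_exact(counts)
    approx = log_frequency_stirling(counts)
    # The absolute error grows only like log(N), so the relative error shrinks with N.
    print(n_total, exact, approx, abs(exact - approx) / abs(exact))
```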

Why maximize the entropy?

Information theory: maximum entropy = minimum information (Shannon, 1948).

Logical consistency (Shore & Johnson, 1980).

Uncorrelated assignments related monotonically to \(S\) (Skilling, 1988).

Consider the third argument. Let us check it empirically for the problem of hair color and handedness of Scandinavians. We are interested in determining \(p_1 \equiv p(L,B|I) \equiv x\), the probability that a Scandinavian is both left-handed and blonde. In this simple example, however, we can immediately see that the assignment \(p_1=0.07\) is the only one that implies no correlation between left-handedness and hair color. Any joint probability smaller than 0.07 implies that left-handed people are less likely to be blonde, and any larger value implies that they are more likely to be blonde.

So unless you have specific information about the existence of such a correlation, you should not build it into the assignment of the probability \(p_1\).

Question: Can you show why \(p_1 < 0.07\) and \(p_1 > 0.07\) correspond to left-handedness and blondeness being dependent variables?

Hint: The joint probability of left-handed and blonde is \(x\). Using the other expressions in the table, what is this joint probability if the probabilities of being left-handed and of being blonde are independent?

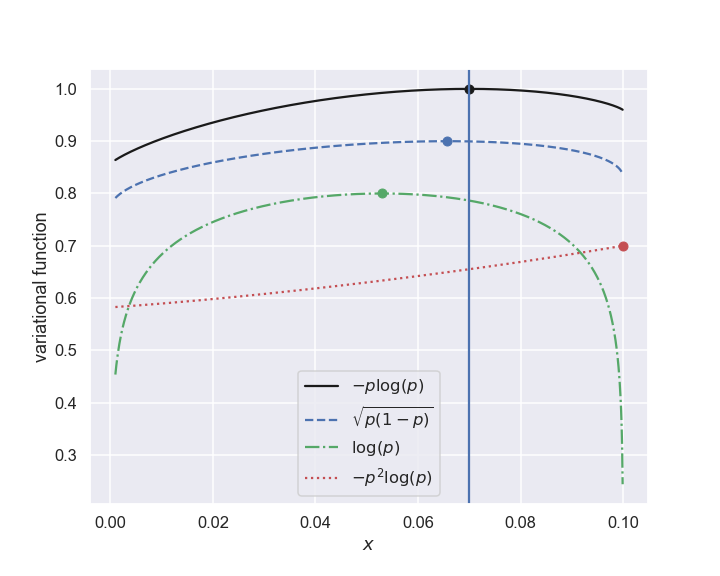

Let us now empirically consider a few variational functions of \(\{ p_i \}\) and see if any of them gives a maximum that corresponds to the uncorrelated assignment \(x=0.07\), which implies \(p_1 = 0.07, \, p_2 = 0.63, \, p_3 = 0.03, \, p_4 = 0.27\). A few variational functions and their prediction for \(x\) are shown in the following table.

| Variational function | Optimal \(x\) | Implied correlation |
|---|---|---|
| \(-\sum_i p_i \log(p_i)\) | 0.070 | None |
| \(\sum_i \log(p_i)\) | 0.053 | Negative |
| \(-\sum_i p_i^2 \log(p_i)\) | 0.100 | Positive |
| \(\sum_i \sqrt{p_i(1-p_i)}\) | 0.066 | Negative |

Of these four variational functions, only the entropy measure yields an assignment that respects this lack of correlations.

Four different variational functions \(f\bigl(\{ p_i \}\bigr)\). The optimal \(x\) for each one is shown by a circle. The uncorrelated assignment \(x=0.07\) is shown by a vertical line.
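The entries of the table can be reproduced with a simple grid search over \(x\). The sketch assumes the four cells are parameterized as \(p_1 = x\), \(p_2 = 0.7 - x\), \(p_3 = 0.1 - x\), \(p_4 = 0.2 + x\). This corresponds to marginals \(p(B|I) = 0.7\) and \(p(L|I) = 0.1\), inferred from the uncorrelated values quoted above rather than read from the image. The grid resolution is an arbitrary choice.

```python
import math

# Assumed marginals, inferred from the uncorrelated assignment (0.07, 0.63, 0.03, 0.27).
P_LEFT, P_BLONDE = 0.1, 0.7


def cells(x):
    """Joint probabilities (p1, p2, p3, p4) as functions of x = p(L, B | I)."""
    return (x, P_BLONDE - x, P_LEFT - x, 1.0 - P_BLONDE - P_LEFT + x)


VARIATIONAL = {
    "-sum p log p": lambda p: -sum(pi * math.log(pi) for pi in p),
    "sum log p": lambda p: sum(math.log(pi) for pi in p),
    "-sum p^2 log p": lambda p: -sum(pi**2 * math.log(pi) for pi in p),
    # Concave in x, so this function has an interior maximum.
    "sum sqrt(p(1-p))": lambda p: sum(math.sqrt(pi * (1.0 - pi)) for pi in p),
}


def optimal_x(f, n_grid=20_000):
    """Grid search (bin midpoints) for the x in the open interval (0, 0.1) maximizing f."""
    xs = (P_LEFT * (k + 0.5) / n_grid for k in range(n_grid))
    return max(xs, key=lambda x: f(cells(x)))


for name, f in VARIATIONAL.items():
    print(f"{name:>18s}: optimal x = {optimal_x(f):.3f}")
```

For \(-\sum_i p_i^2 \log(p_i)\) the maximum sits at the edge of the allowed range, \(x \to 0.1\), where \(p_3 \to 0\).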

Continuous case

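Return to the monkeys, but now let each box \(i\) have its own probability \(m_i\) of receiving a ball (with \(\sum_i m_i = 1\)). The preferred pdf then maximizes

\[
S = -\sum_{i=1}^M p_i \log\left( \frac{p_i}{m_i} \right),
\]

which is often known as the Shannon-Jaynes entropy, or the Kullback number, or the cross entropy (with opposite sign).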

Jaynes (1963) has pointed out that this generalization of the entropy, including a Lebesgue measure \(m_i\), is necessary when we consider the limit of continuous parameters, where it becomes \(S = -\int p(x) \log\left( p(x)/m(x) \right) dx\).

In particular, \(m(x)\) ensures that the entropy expression is invariant under a change of variables \(x \to y=f(x)\).
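Here is a numerical sketch of this invariance. Take an illustrative Gaussian \(p(x)\) and a normalized Gaussian measure \(m(x)\); both choices are arbitrary. Transform to \(y = e^x\) and evaluate the continuous Shannon-Jaynes entropy \(S = -\int p \log(p/m)\,dx\) in both variables. For contrast, we also compute the entropy without the measure, \(-\int p \log(p)\,dx\). The grid and the hand-written trapezoidal rule are illustrative choices.

```python
import numpy as np


def gaussian(t, mu, sigma):
    """Normalized Gaussian pdf evaluated on the array t."""
    return np.exp(-0.5 * ((t - mu) / sigma) ** 2) / (np.sqrt(2.0 * np.pi) * sigma)


def trapezoid(f, t):
    """Trapezoidal rule on a possibly non-uniform grid t."""
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(t)))


def shannon_jaynes(p, m, t):
    """S = -int p log(p/m) dt on the grid t."""
    return -trapezoid(p * np.log(p / m), t)


def naive_entropy(p, t):
    """-int p log p dt, i.e. the entropy without a measure m."""
    return -trapezoid(p * np.log(p), t)


x = np.linspace(-12.0, 14.0, 20_001)
p_x = gaussian(x, 1.0, 1.0)  # illustrative pdf p(x)
m_x = gaussian(x, 0.0, 2.0)  # illustrative normalized measure m(x)

# Change variables to y = exp(x): both densities pick up the Jacobian |dx/dy| = 1/y.
y = np.exp(x)
p_y, m_y = p_x / y, m_x / y

print("S in x:", shannon_jaynes(p_x, m_x, x))
print("S in y:", shannon_jaynes(p_y, m_y, y))
print("naive entropy in x:", naive_entropy(p_x, x))
print("naive entropy in y:", naive_entropy(p_y, y))
```

The two values of \(S\) agree. Both are close to \(-[\log(2) + 1/4 - 1/2] \approx -0.443\), which is minus the Kullback-Leibler divergence between the two Gaussians. The entropy without \(m\), by contrast, shifts by \(\langle x \rangle = 1\) under the same change of variables.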

Typically, the transformation-group (invariance) arguments are appropriate for assigning \(m(x) = \mathrm{constant}\).

However, there are situations where other assignments for \(m\) represent the most ignorance. For example, in counting experiments one might assign \(m(N) = M^N / N!\) for the number of observed events \(N\) and a very large number of intervals \(M\).

Derivation of common pdfs using MaxEnt

The principle of maximum entropy (MaxEnt) allows incorporation of further information, e.g., constraints on the mean, variance, etc., into the assignment of probability distributions.

In summary, the MaxEnt approach aims to maximize the Shannon-Jaynes entropy and generates smooth functions.

Mean and the Exponential pdf

Suppose that we have a pdf \(p(x|I)\) that is normalized over some interval \([ x_\mathrm{min}, x_\mathrm{max}]\). Assume that we have information about its mean value, i.e.,

\[
\langle x \rangle = \int_{x_\mathrm{min}}^{x_\mathrm{max}} x\, p(x|I)\, dx = \mu.
\]

Based only on this information, what functional form should we assign for the pdf that we will now denote \(p(x|\mu)\)?

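Let us use the principle of MaxEnt and maximize the entropy under the normalization and mean constraints. We will use Lagrange multipliers and perform the optimization as a limiting case of a discrete problem. Explicitly, we will maximize

\[
Q\left(\{p_j\}; \lambda_0, \lambda_1\right) = -\sum_j p_j \log\left( \frac{p_j}{m_j} \right) + \lambda_0 \left( 1 - \sum_j p_j \right) + \lambda_1 \left( \mu - \sum_j x_j p_j \right).
\]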

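Setting \(\partial Q / \partial p_j = 0\) we obtain

\[
-\log\left( \frac{p_j}{m_j} \right) - 1 - \lambda_0 - \lambda_1 x_j = 0
\quad \Rightarrow \quad
p_j = m_j \exp\left[ -(1 + \lambda_0) \right] \exp\left( -\lambda_1 x_j \right).
\]

With a uniform measure \(m_j = \mathrm{constant}\) we find (in the continuous limit) that

\[
p(x|\mu) = \mathcal{N} \exp\left( -\lambda_1 x \right).
\]

The normalization constant \(\mathcal{N}\) (related to \(\lambda_0\)) and the remaining Lagrange multiplier, \(\lambda_1\), can easily be determined by fulfilling the two constraints.

Assuming, e.g., that the normalization interval is \(x \in [0, \infty[\), we obtain

\[
\int_0^\infty p(x|\mu)\, dx = \frac{\mathcal{N}}{\lambda_1} = 1 \quad \Rightarrow \quad \mathcal{N} = \lambda_1.
\]

The constraint for the mean then gives

\[
\mu = \lambda_1 \int_0^\infty x\, e^{-\lambda_1 x}\, dx = \frac{1}{\lambda_1} \quad \Rightarrow \quad \lambda_1 = \frac{1}{\mu}.
\]

The properly normalized pdf from MaxEnt principles is therefore the exponential distribution

\[
p(x|\mu) = \frac{1}{\mu} \exp\left( -\frac{x}{\mu} \right).
\]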
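The limiting procedure can be mimicked directly as a discretized problem:

- split \([0, \infty[\) into small bins, truncated at an assumed \(x_\mathrm{max} = 50\mu\);
- impose the stationarity condition \(p_j \propto \exp(-\lambda_1 x_j)\) that follows from a uniform measure;
- solve the mean constraint for \(\lambda_1\) by bisection.

The bin width and the cutoff are arbitrary illustrative choices, and the result should approach \(\lambda_1 = 1/\mu\).

```python
import numpy as np


def maxent_mean_only(mu, x_max_factor=50.0, dx=1e-3):
    """Discretized MaxEnt with normalization and mean constraints on [0, x_max].

    Uses p_j proportional to exp(-lambda1 * x_j) (uniform measure m_j) and finds
    lambda1 by bisection so that the discrete mean equals mu.
    """
    x = np.arange(0.5 * dx, x_max_factor * mu, dx)  # bin midpoints

    def mean(lam):
        w = np.exp(-lam * (x - x[0]))  # shifting by x[0] avoids all-zero weights
        return float(np.sum(x * w) / np.sum(w))

    lo, hi = 1e-6 / mu, 1e3 / mu  # mean(lo) is about x_max / 2 > mu, mean(hi) < mu
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if mean(mid) > mu:
            lo = mid  # mean too large: lambda1 must increase
        else:
            hi = mid
    return 0.5 * (lo + hi)


for mu in (0.5, 1.0, 2.0):
    lam1 = maxent_mean_only(mu)
    print(f"mu = {mu}: lambda1 = {lam1:.4f}, 1/mu = {1 / mu:.4f}")
```

Bin midpoints are used so that the discrete mean converges quickly to its continuum value as the bin width shrinks.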

Variance and the Gaussian pdf

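Suppose that we have information not only on the mean \(\mu\) but also on the variance

\[
\left\langle (x - \mu)^2 \right\rangle = \int (x - \mu)^2 \, p(x|I)\, dx = \sigma^2.
\]

The principle of MaxEnt will then result in the continuum assignment

\[
p(x|\mu, \sigma) \propto \exp\left[ -\lambda_1 (x - \mu)^2 \right].
\]

Assuming that the limits of integration are \(\pm \infty\), this results in the familiar Gaussian pdf (with \(\lambda_1 = 1/(2\sigma^2)\))

\[
p(x|\mu, \sigma) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left[ -\frac{(x - \mu)^2}{2\sigma^2} \right].
\]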

This indicates that the normal distribution is the most honest representation of our state of knowledge when we only have information about the mean and the variance.
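As a sanity check of this statement, the sketch below compares closed-form differential entropies (standard textbook results, with a constant measure \(m(x)\)) for three pdfs that share the same variance \(\sigma^2\): the Gaussian, the Laplace and the uniform pdf. The two comparison pdfs are illustrative choices. The Gaussian should come out largest for every \(\sigma\).

```python
import math


def entropy_gaussian(sigma):
    """Differential entropy of a Gaussian with standard deviation sigma."""
    return 0.5 * math.log(2.0 * math.pi * math.e * sigma**2)


def entropy_laplace(sigma):
    """Laplace pdf with variance sigma^2 has scale b = sigma / sqrt(2); H = 1 + log(2b)."""
    b = sigma / math.sqrt(2.0)
    return 1.0 + math.log(2.0 * b)


def entropy_uniform(sigma):
    """Uniform pdf with variance sigma^2 has width w = sigma * sqrt(12); H = log(w)."""
    return math.log(sigma * math.sqrt(12.0))


sigma = 1.0
for name, h in [("Gaussian", entropy_gaussian), ("Laplace", entropy_laplace), ("uniform", entropy_uniform)]:
    print(f"{name:>8s}: H = {h(sigma):.4f}")
```

All three entropies scale as \(\log(\sigma)\) plus a constant, so the ordering does not depend on \(\sigma\).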

Notice.

These arguments extend easily to the case of several parameters. For example, consider \(\{x_k\}\) as the data \(\{ D_k\}\) with error bars \(\{\sigma_k\}\), and \(\{\mu_k\}\) as the model predictions. This allows us to identify the least-squares likelihood as the pdf that best represents our state of knowledge, given only the value of the expected squared deviation between our predictions and the data:

\[
p\left(\{D_k\} | \{\mu_k, \sigma_k\}\right) = \prod_{k} \frac{1}{\sqrt{2\pi}\,\sigma_k} \exp\left[ -\frac{(D_k - \mu_k)^2}{2\sigma_k^2} \right].
\]
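Taking the logarithm of a product of independent Gaussian pdfs, one per datum, makes the connection to least squares explicit. The result is \(\log p = -\chi^2/2 - \sum_k \log(\sqrt{2\pi}\,\sigma_k)\), with \(\chi^2 = \sum_k (D_k-\mu_k)^2/\sigma_k^2\), and the second term does not depend on the model. The minimal sketch below checks this identity with made-up data, error bars and two hypothetical sets of predictions.

```python
import math


def gaussian_log_likelihood(data, sigmas, predictions):
    """log p({D_k} | {mu_k, sigma_k}) for independent Gaussian errors."""
    return sum(
        -0.5 * ((d - mu) / s) ** 2 - math.log(math.sqrt(2.0 * math.pi) * s)
        for d, s, mu in zip(data, sigmas, predictions)
    )


def chi_squared(data, sigmas, predictions):
    """Sum of squared residuals weighted by the error bars."""
    return sum(((d - mu) / s) ** 2 for d, s, mu in zip(data, sigmas, predictions))


# Hypothetical data, error bars and two candidate sets of model predictions.
data = [1.1, 1.9, 3.2, 3.8]
sigmas = [0.1, 0.2, 0.2, 0.3]
model_a = [1.0, 2.0, 3.0, 4.0]
model_b = [1.2, 1.8, 3.1, 4.1]

const = sum(math.log(math.sqrt(2.0 * math.pi) * s) for s in sigmas)
for preds in (model_a, model_b):
    log_l = gaussian_log_likelihood(data, sigmas, preds)
    chi2 = chi_squared(data, sigmas, preds)
    # log L = -chi^2 / 2 - const, so ranking models by likelihood equals ranking by chi^2.
    print(f"log L = {log_l:.4f}, -chi2/2 - const = {-0.5 * chi2 - const:.4f}")
```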

If we had convincing information about the covariance \(\left\langle \left( x_i - \mu_i \right) \left( x_j - \mu_j \right) \right\rangle\), where \(i \neq j\), then MaxEnt would assign a correlated, multivariate Gaussian pdf for \(p\left( \{ x_k \} | I \right)\).

Counting statistics and the Poisson distribution

The derivations, and the underlying arguments, for the binomial distribution and the Poisson distribution based on MaxEnt are found in Sivia, Secs 5.3.3 and 5.3.4.